Over the course of FY20, spanning from June 1, 2021, through May 31, 2022, the Maryland-National Capital Region Emergency Response System (MDERS) continued to assist stakeholders in enhancing response capabilities through plan and policy development, training and exercise development and delivery, and equipment acquisition to support the missions of partner agencies. Throughout this period of performance, MDERS oversaw the implementation and/or expansion of ten unique capabilities:

- Ballistic Protection for Fire/Rescue/EMS Personnel

- Emergency Management Response and Recovery Professional Services

- Incident Command Tools

- Innovation Fund

- Law Enforcement Special Events Response

- Mass Casualty Incident Support

- Medical Resource Officers

- Public Access Trauma Care (PATC)

- Tactical Equipment for Law Enforcement

- Training and Exercise Program

MDERS approached each of these programs through a comprehensive capability development process that builds, implements, and sustains emergency response capabilities. Through this process, with the guidance of its Steering Committee and support of its partner agencies, MDERS continues to pursue its ultimate vision of integrating and optimizing all capabilities to provide superior service to the nearly two million residents of Montgomery and Prince George’s Counties.

An overview of each program supported in FY20 is provided in the following sections.*

Ballistic Protection for Fire/Rescue/EMS Personnel

To better protect fire/rescue/EMS personnel that may deploy to provide medical care in a “warm” or “hot” zone during an active threat event, MDERS procured a variety of protective equipment for Montgomery County Fire and Rescue Service (MCFRS) and Prince George’s County Fire and EMS Department (PGFD). This equipment includes ballistic-rated body armor, armor plate carriers, and both ballistic-rated and non-ballistic eye and face protection. Additionally, MDERS provided modular medical supplies that mount to armored plate carriers, including shears, tactical emergency casualty care supplies, triage tape, flashlights, and litters that may be used to transport patients or injured responders to a casualty collection point.

Emergency Management Response and Recovery Professional Services

MDERS continued its support of the diverse missions and functions of the Montgomery County Office of Emergency Management and Homeland Security (OEMHS) and the Prince George’s County Office of Emergency Management (OEM) through the provision of professional services. In Montgomery County, MDERS funds a full-time employee who supports OEMHS’s emergency management and volunteer and donations management, and provides funding for as-needed contract support through the University of Maryland Center for Health and Homeland Security. In Prince George’s County, MDERS funds four full-time employees: one dedicated to planning, one dedicated to training and exercises, and two dedicated to volunteer and donations management.

Incident Command Tools

To expand and enhance the incident command capability for use by current and future incident commanders in the public safety community, MDERS procured a variety of equipment, field reference materials, and software. These investments include video cameras, monitors, and other hardware and software that support the Command Competency Labs, a local training resource that allows incident commanders to train in immersive, simulated environments. Additionally, MDERS designed and provided hard-copy command guides for the Prince George’s County Police Department (PGPD) to assist officers in establishing and maintaining incident command during a variety of common events.

Innovation Fund

In FY20, MDERS implemented the Emerging Homeland Security Pilot program, otherwise known as the “Innovation Fund.” Through the Innovation Fund, stakeholder agencies are able to apply to procure, implement, and evaluate novel solutions to address emerging response challenges. After receiving and evaluating the specific technology, stakeholder agencies can then assess whether to proceed with further investments and operationalization. Some of the technologies piloted through the Innovation Fund over the past year include:

- Augmented Training Systems (ATS) Virtual Reality provides MCFRS a portable solution to mass casualty triage training through the Oculus virtual reality headset.

- MyEOP Mobile Application provides Region V healthcare systems with a mobile application that serves as a document repository for critical response plans, reference information, or other documentation that can be accessed from any mobile device.

- Leader Search Bluetooth Listening Devices provide MCFRS’s and PGFD’s structural collapse rescue teams with a rapidly deployed sensor to listen for trapped victims.

- PerSim Augmented Reality Patient Assessment Training System provides MCFRS with an interactive patient assessment training tool utilizing the Microsoft HoloLens system, which can project injuries, as well as responses to patient care, on top of live patients or mannequins.

- Situational Awareness Cameras for Law Enforcement Armored Vehicles provide MCPD with vehicle-mounted cameras that can stream real-time footage of an incident scene back to the incident command post.

- Structural Collapse Training Mannequins provide structural collapse and search and rescue teams with high fidelity patient simulators that can easily be transported to different training sites, but are durable enough for austere conditions.

Law Enforcement Special Events Response

MDERS supported the continued implementation of the Montgomery County Police Department’s (MCPD) and PGPD’s public order/civil disturbance capability through the procurement and provision of personal protective equipment (PPE) and specialized training for both departments’ Level 1 response teams. Officers equipped with this PPE and the knowledge, skills, and abilities provided during the Level 1 training enable MCPD and PGPD to respond to large-scale civil disturbance events with an operational posture informed by the most modern standards developed across the United States and Europe.

Mass Casualty Incident Support

MDERS continued its goal to better prepare its partners to triage, treat, and transport victims of a mass casualty incident through the procurement of a mobile mass casualty incident support cache. At the core of this cache is a 26’ Ford F650 box truck that will be appropriately outfitted with necessary medical equipment and supplies by the Region V Healthcare Coalition. Available to any of the six major healthcare systems in Montgomery and Prince George’s Counties, this cache serves as an on-demand resource that will deploy and support local healthcare facilities during an acute surge that exceeds existing capacity.

Medical Resource Officers

MDERS funded two full-time medical resource officers (MROs), one each in Montgomery and Prince George’s Counties, to bolster public health emergency preparedness and response capabilities. These MROs lead the coordination of the local Medical Reserve Corps (MRC) volunteers in both counties, including the recruitment, credentialing, planning, training, exercising, and deployment of volunteers. Through the coordination and oversight of the MROs, the county MRCs aim to strengthen individual, community, and workplace preparedness in the Maryland-National Capital Region. In both Montgomery and Prince George’s Counties, the MROs and the MRCs that they oversee were instrumental in supporting ongoing COVID-19 response efforts, including operating call centers, conducting surveillance efforts, and supporting testing and vaccination sites.

Public Access Trauma Care (PATC)

MDERS continued the expansion of the PATC capability across Montgomery and Prince George’s Counties. Designed to empower bystanders with the knowledge, skills, abilities, and supplies to deliver immediate medical care prior to the arrival of first responders, the PATC program deploys the equipment and training necessary to treat common injuries associated with life-threatening trauma. Over the past year, the PATC program provided a cache of 84 training kits to Montgomery County Public Schools (MCPS) for the continued delivery of training and education to students and faculty in the county. In Prince George’s County, MDERS procured 842 cabinets and five-pack kits that will be mounted in government buildings and public schools.

Tactical Equipment for Law Enforcement

MDERS remains a critical partner in supporting MCPD’s and PGPD’s Special Weapons and Tactics (SWAT) team members. Over the past year, MDERS helped MCPD procure a variety of equipment for its SWAT team, including thermal imaging technology, night vision goggles, long-range targeting camera systems, ballistic shields, bomb disposal robotics, training supplies, and cold weather gear, and supported the upfitting of response vehicles with essential tools and equipment. Simultaneously, MDERS provided PGPD’s SWAT team members with bomb disposal robotics, gas masks, and the funding necessary to refurbish armored vehicles. Through these investments, MDERS supported MCPD’s and PGPD’s ability to expeditiously, effectively, and efficiently respond to and mitigate a variety of high-threat scenarios.

Training and Exercise Program

MDERS’s Training and Exercise program offers numerous opportunities for stakeholders to develop and enhance capabilities through in-person, virtual, and hybrid curricula. These offerings range from highly specialized tactical trainings to policy-level and leadership theory. These events include:

- Advanced Law Enforcement Rapid Response Training (ALERRT) Conference: This conference focuses on integrated response topics for law enforcement, fire/rescue/EMS personnel, medical providers, and emergency management professionals that may be involved in active threat response operations.

- Advanced Strategic Public Order Command: This course instructs students on a variety of critical concepts necessary for effective Public Order Command and Control, including the role of law enforcement during protests or disorder, command structures, strategy, tactical planning and decision making, and overarching responsibilities.

- Anatomy Gift Registry Lab: This training teaches emergency medical technicians and paramedics the necessary knowledge, skills, and abilities outlined in the Maryland Medical Protocol and allows students to apply those skills on real tissue.

- Assessment and Training Solutions Consulting Corporation (ATSCC) Tactical Emergency Casualty Care (TECC) Live Tissue Class: This class provides tactical law enforcement personnel and other special operations responders with a practical procedures and skills laboratory, as well as a simulated mass casualty incident exercise scenario to practice self-aid and buddy-aid in a high-threat environment.

- Direct Action Resource Center (DARC) Advanced Sniper Integration Course: This course instructs sniper and observer teams on the skills and tactics necessary to provide support for tactical operations in complex environments or large venues under a variety of conditions, ranges, visibility, and target types.

- DARC Level 1 Training: This course provides law enforcement officers with the knowledge of tactical leadership, terrain analysis, and planning methodologies to combat a coordinated, multi-cell attack within their jurisdiction.

- DARC Level 2 Training: This course expands upon the Level 1 training through the use of live-fire training, explosive and ballistic breaching, and sniper/observer support to help law enforcement officers combat a complex, multi-cell attack within their jurisdiction.

- DARC Close Quarters Battle/TECC: This course integrates emergency medicine, tactical emergency casualty care, explosive and ballistic breaching, live-fire training, and force-on-force training across a variety of high threat scenarios including active shooters, high risk warrants, barricades, hostage rescue, and complex coordinated terrorist attacks.

- DARC Night Vision Instructor Course: This train-the-trainer course instructs law enforcement training officers on the deployment, integration, limitations, and considerations of night vision technology so that they can create in-house training programs for other law enforcement personnel at their local departments.

- DeconTect Train-the-Trainer Decontamination Training: This train-the-trainer course provides training officers for public safety agencies with the knowledge, skills, and abilities to develop standard operating procedures and in-house training programs for their department for various decontamination scenarios including cold weather decon, post-fire decon, disinfection, and low-footprint decon.

- EMT Tactical Basic Course: This course encompasses a nationally standardized curriculum and certification for EMTs, paramedics, and physicians that operate as part of a law enforcement special response team.

- Federal Aviation Administration (FAA) Unmanned Aerial Systems (UAS) Symposium: This virtual conference offers participants the opportunity to engage across multi-disciplinary government agencies and industry experts about the regulations, ongoing research, and current initiatives pertaining to the use of UAS within the National Airspace System, including safety, remote identification, regulations, flight times, and beyond visual line of sight operations.

- Federal Bureau of Investigation (FBI) Law Enforcement Executive Development Association (LEEDA) Command Leadership Training: This training prepares law enforcement leaders for command level positions through a variety of topics including command responsibility, discipline and liability, team building, resilient leadership, and leading change within an organization.

- Fire Department Instructors Conference (FDIC) International Annual Conference: This conference provides fire/rescue professionals from around the globe with the opportunity to learn directly from instructors through classroom sessions, workshops, hands-on training, and novel technology offerings that cover a breadth of topics across the fire/rescue industry.

- First Receiver Operations Training (FROT): This course educates first responders and first receivers on the lifesaving skills necessary to identify, triage, treat, and decontaminate victims exposed to chemical, biological, radiological, nuclear, or other hazardous materials in compliance with Centers for Disease Control and Prevention and Occupational Safety and Health Administration guidelines.

- FireStats: This class provides students with an enhanced understanding of statistics and decision sciences as they pertain to the fire/rescue/EMS industry, specifically in deployment analysis, data presentation, and resource planning.

- Gracie Survival Tactics Level I Course: This course teaches students 23 standing and ground-based defensive techniques that address the most common situations which may threaten law enforcement officers.

- High Angle Sniper Course: This course provides law enforcement officers with the knowledge, skills, and abilities to conduct sniper missions and precision shooting from elevated surfaces in both urban and rural environments.

- High Performance Leadership Academy: This course provides public sector leaders and decision-makers with an interactive online learning platform, which combines real-time webinars, recorded sessions, and interactive small group discussions, to enhance students’ abilities to conduct five key skills: leading, organizing, collaborating, communicating, and delivering.

- International Association of Emergency Managers (IAEM) Annual Conference: This conference engages emergency management professionals across all levels of government and private sector organizations on contemporary topics across the emergency management enterprise.

- Louisiana State University Homeland Security Specialist MicroCert: This program encompasses a variety of topics within the homeland security enterprise, including collaboration between homeland security and law enforcement in a post 9/11 environment, the tradecraft of modern terrorism, intelligence, multi-agency partnerships, and public-private partnerships.

- Massachusetts Institute of Technology (MIT) Crisis Management and Business Resiliency Course: This course provides emergency management professionals with a combination of lecture-based learning, case studies, and interactive activities to examine modern crisis management response, including the COVID-19 pandemic.

- Master Tactical Breacher Course: This course provides law enforcement officers with a comprehensive understanding of breaching techniques and their application across multiple environments, including explosive, manual, mechanical, ballistic, thermal, and hydraulic breaching methodologies.

- MDERS Annual Symposium: This virtual symposium fosters creativity and innovation to address the numerous complexities faced by emergency response organizations and the homeland security enterprise, including climate change and its impact on critical infrastructure, pandemic response, cyber threats to public safety, rising violent extremism, and other ongoing threats.

- MDERS Cybersecurity Workshop: This workshop provides participants with an understanding of how their organization can better prepare for and respond to a cyber incident. The course combines instruction on key cybersecurity concepts with opportunities for participants to directly apply those concepts in an interactive setting.

- National Association of County and City Health Officials (NACCHO) Preparedness Summit: This summit engages public health officials on a variety of topics including leadership and workforce, strategic partnerships, flexible and sustainable funding, data analysis, and foundational infrastructure.

- National Association of Emergency Medical Services Physicians (NAEMSP) Annual Meeting: This conference provides EMS medical directors with the opportunity to learn from medical experts in specialized fields and enhance their knowledge in scientific and technological advancements in the EMS field.

- National Homeland Security Association (NHSA) National Homeland Security Conference (NHSC): This conference helps personnel from various emergency disciplines to identify emerging homeland security threats and shares new technologies to enhance operational response efforts.

- National Preparedness Leadership Initiative (NPLI) Virtual Seminar Series: Transformational Connectivity: This seminar series brings together leaders from across the emergency response spectrum to impart the tools and techniques of Meta-Leadership to help foster a connective and high-performance work environment.

- Pinnacle Conference: This conference engages EMS leaders to adapt to the changing environment of emergency medical services through thought provoking lectures and smaller educational sessions.

- Resilient Virginia 2021 Conference: This conference delivers the tools, informational resources, and relationship building opportunities for attendees to build resiliency in their communities, help address climate change, and overcome social challenges throughout the process.

- Rigging Lab Academy: This online course provides fire/rescue personnel with detailed instruction on objectives, strategies, and techniques used to enhance technical rescue, search and rescue, and other rope rescue programs.

- Shooting, Hunting, Outdoor Trade (SHOT) Show: This conference provides attendees with an opportunity to learn about, observe demonstrations of, and evaluate evolving technology from military, law enforcement, and other tactical vendors.

- Sniper Team Leader Course: This course supports sniper team leaders by reviewing and adjusting the team’s current programs and training requirements, ensuring proper documentation of training and operations, evaluating the team’s current supply inventory, and preparing each team for numerous response deployments.

- Special Operations Medical Association (SOMA) Scientific Assembly (SOMSA) Conference: This conference enhances the medical capabilities of special operation medical providers through lectures and educational opportunities provided by medical professionals and civilian partners.

- SWAT Command Decision-Making and Level 1 Course: This course provides SWAT team leaders with the necessary skills to effectively confront a multitude of different emergencies and prepare for every phase of the response including planning, negotiations, operational maneuvers, media engagement, and debriefs.

- Tomahawk Fundamentals of Close Quarters Combat: This course provides law enforcement personnel with the best practices, techniques, and procedures for close quarter operations through simulated exercises and classroom education.

*A detailed synopsis and budgetary breakdown of each of these programs will be provided in the FY20 MDERS Annual Report over the coming months. This report will be available at www.mders.org.

MDERS partnered with MCPS to configure the training kits to best meet the needs of Montgomery County high schools. To ensure students are prepared to deploy a PATC kit in a real-world situation, each training kit contains a variety of equipment mirroring the supplies an individual may find in the PATC kits installed throughout MCPS. Each high school received three training kits comprised of the following supplies:

Originally published in 2002 by the Federal Emergency Management Agency (FEMA), HSEEP establishes guidelines for the development and implementation of effective exercise programs. HSEEP outlines key guidelines in five components of the exercise cycle: program management, design and development, conduct, evaluation, and improvement planning. These guidelines ensure a consistent methodology across jurisdictions and agencies, while remaining adaptable to individual organizations’ needs. In 2020, FEMA released an update to HSEEP, reflecting feedback solicited from the exercise community. Key changes to the 2020 HSEEP updates include: increased inclusivity of the whole community, additional tools and training resources for exercise design, application of “SMART” methodology to improvement planning, and the implementation of an Integrated Preparedness Plan (IPP) to replace the Multi-Year Training and Exercise Plan (MyTEP).*

DARC offers curriculum designed to build in complexity as students progress into more advanced tactics. DARC requires all students to complete two pre-requisite courses prior to enrolling in more advanced offerings. These pre-requisites are identified and summarized below.

After completing the LECTC-1 and LECTC-2 prerequisites, students may enroll in specialized response areas, such as advanced operational breaching, advanced sniper integration, and tactical night vision instruction. These courses provide operators with the knowledge and tools required to lead a breaching team, integrate a sniper and observer team in tactical operations, deploy during large venue counter-terrorism operations, and conduct operations using night-vision capabilities. The specific courses attended by PGPD since 2014 are identified and summarized below.

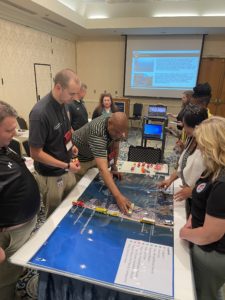

Between February and April 2022, MCPD planned and conducted four tabletop exercises for MCPD executive staff with support from the Maryland-National Capital Region Emergency Response System (MDERS). These exercises assessed MCPD executive staff’s ability to manage the first 30-60 minutes of a major incident, including phases one and two of their response process, while reinforcing participants’ knowledge and understanding of MCPD directives, policies, and procedures.

To better protect fire/rescue/EMS personnel that may deploy to provide medical care in a “warm” or “hot” zone during an active threat event, MDERS procured a variety of protective equipment for Montgomery County Fire and Rescue Service (MCFRS) and Prince George’s County Fire and EMS Department (PGFD). This equipment includes ballistic-rated body armor, armor plate carriers, and both ballistic-rated and non-ballistic eye and face protection. Additionally, MDERS provided modular medical supplies that mount to armored plate carriers, including shears, tactical emergency casualty care supplies, triage tape, flashlights, and litters that may be used to transport patients or injured responders to a casualty collection point.

To better protect fire/rescue/EMS personnel that may deploy to provide medical care in a “warm” or “hot” zone during an active threat event, MDERS procured a variety of protective equipment for Montgomery County Fire and Rescue Service (MCFRS) and Prince George’s County Fire and EMS Department (PGFD). This equipment includes ballistic-rated body armor, armor plate carriers, and both ballistic-rated and non-ballistic eye and face protection. Additionally, MDERS provided modular medical supplies that mount to armored plate carriers, including shears, tactical emergency casualty care supplies, triage tape, flashlights, and litters that may be used to transport patients or injured responders to a casualty collection point. In FY20, MDERS implemented the Emerging Homeland Security Pilot program, otherwise known as the “Innovation Fund.” Through the Innovation Fund, stakeholder agencies are able to apply to procure, implement, and evaluate novel solutions to address emerging response challenges. After receiving and evaluating the specific technology, stakeholder agencies can then assess whether to proceed with further investments and operationalization. Some of the technology piloted through the Innovation Fund over the past year include:

MDERS continued working toward its goal of better preparing its partners to triage, treat, and transport victims of a mass casualty incident through the procurement of a mobile mass casualty incident support cache. At the core of this cache is a 26’ Ford F650 box truck that the Region V Healthcare Coalition will outfit with the necessary medical equipment and supplies. Available to any of the six major healthcare systems in Montgomery and Prince George’s Counties, this cache serves as an on-demand resource that will deploy to support local healthcare facilities during an acute surge that exceeds existing capacity.

MDERS funded two full-time medical resource officers (MROs), one each in Montgomery and Prince George’s Counties, to bolster public health emergency preparedness and response capabilities. These MROs lead the coordination of the local Medical Reserve Corps (MRC) volunteers in both counties, including the recruitment, credentialing, planning, training, exercising, and deployment of volunteers. Through the MROs’ coordination and oversight, the county MRCs aim to strengthen individual, community, and workplace preparedness in the Maryland-National Capital Region. In both counties, the MROs and the MRCs they oversee were instrumental in supporting ongoing COVID-19 response efforts, including operating call centers, conducting surveillance efforts, and supporting testing and vaccination sites.

MDERS continued the expansion of the PATC capability across Montgomery and Prince George’s Counties. Designed to empower bystanders with the knowledge, skills, abilities, and supplies to deliver immediate medical care prior to the arrival of first responders, the PATC program deploys the equipment and training necessary to treat common injuries associated with life-threatening trauma. Over the past year, the PATC program provided a cache of 84 training kits to Montgomery County Public Schools (MCPS) for the continued delivery of training and education to students and faculty in the county. In Prince George’s County, MDERS procured 842 cabinets and five-pack kits that will be mounted in government buildings and public schools.

MDERS remains a critical partner in supporting MCPD’s and PGPD’s Special Weapons and Tactics (SWAT) team members. Over the past year, MDERS helped MCPD procure a variety of equipment for its SWAT team, including thermal imaging technology, night vision goggles, long-range targeting camera systems, ballistic shields, bomb disposal robotics, training supplies, and cold weather gear, and supported the upfitting of response vehicles with essential tools and equipment. Simultaneously, MDERS provided PGPD’s SWAT team members with bomb disposal robotics, gas masks, and the funding necessary to refurbish armored vehicles. Through these investments, MDERS supported MCPD’s and PGPD’s ability to expeditiously, effectively, and efficiently respond to and mitigate a variety of high-threat scenarios.

MDERS’s Training and Exercise program offers numerous opportunities for stakeholders to develop and enhance capabilities through in-person, virtual, and hybrid curricula. These offerings range from highly specialized tactical trainings to policy-level and leadership theory. These events include:

MDERS staff coordinated with officials from Montgomery College to strategize the expansion of the PATC capability into their facilities. As part of an expedited planning process, MDERS staff and Montgomery College officials walked through the Rockville campus to identify potential locations for the PATC kits and complete the necessary Environmental and Historic Preservation (EHP) paperwork. Montgomery College officials then repeated this process at their Germantown campus, Takoma Park campus, and Central Services facility.

Upon completion of all the necessary EHP documentation and paperwork, MDERS supplied Montgomery College with PATC bags and cabinets, which Montgomery College promptly installed in 90 locations across their facilities. Each of these bags stores a patient litter and five individual PATC kits. The individual kits contain a tourniquet, emergency compression dressing, compressed gauze, medical gloves, trauma shears, two chest seals, a survival blanket, a permanent marker, a mini duct tape roll, and a just-in-time instruction card. The expansion of the PATC capability throughout Montgomery College campuses provides an invaluable medical resource for prompt care of an injured victim.

In March 2022, nine members of the Maryland-National Capital Region Emergency Response System (MDERS) stakeholder community began Louisiana State University’s (LSU) 10-week Homeland Security Specialist MicroCert program. Participants included representatives from several stakeholder agencies: the Prince George’s County Police Department (PGPD), Prince George’s County Fire and EMS Department (PGFD), Montgomery County Office of Emergency Management and Homeland Security (OEMHS), and MDERS.

To prepare for a potential response to individuals exposed to unknown hazardous substances, seven hospitals within the Maryland-National Capital Region participated in a series of First Receiver Operations Training (FROT) course offerings throughout the spring of 2022. These two-day trainings gave first receivers the knowledge, skills, and abilities to recognize a hazardous material exposure, triage patients, initiate field treatment, conduct decontamination, and transition patients to definitive care.

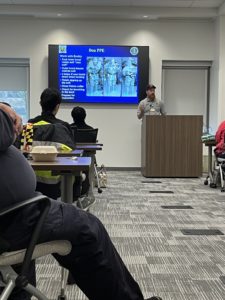

During the second day of training, students received hands-on instruction and demonstrated their ability to properly configure a field decontamination site, including hot, warm, and cold zones, while wearing full PPE. Each of these decontamination sites includes an inflatable shelter with running water to augment permanent infrastructure inside healthcare facilities. Students practiced implementing triage methodologies, deploying equipment, and facilitating both ambulatory and non-ambulatory patient decontamination practices.

Director Luke Hodgson, Planning & Organization Program Manager Samuel Ascunce, and Finance & Logistics Program Manager Lauren Collins outlined to attendees how MDERS has established a capability development process that builds, implements, and sustains critical response capabilities in Prince George’s and Montgomery Counties. At the core of the capability development process is the POETEE model, which consists of Planning, Organization, Equipment, Training, Exercise, and Evaluation. MDERS continues to apply and adapt the capability development process across the Maryland-National Capital Region response enterprise to strengthen stakeholders’ abilities to respond to a multitude of planned and unplanned operations. Participants gained the knowledge, tools, and best practices for building and/or enhancing capabilities within their jurisdictions.

Training and Exercise Specialist Hannah Thomas and Lt. John Berry from the City of Rockville Police Department facilitated a discussion with attendees on MDERS’s Tabletop in a Box program. This systematic, scalable, and economical approach for developing critical capabilities through readily deployable exercises has been implemented throughout the Maryland-National Capital Region across a multitude of law enforcement; fire, rescue, and emergency medical services (EMS); healthcare; and public health organizations. Conference attendees participated in a Tabletop in a Box exercise during the conference, responding to a severe weather emergency in the Ocean City area leading up to a holiday weekend.

Training and Exercise Specialist Hannah Thomas, Chief Doug Hinkle from the Montgomery County Fire and Rescue Service (MCFRS), Mitch Dinowitz from the Montgomery County Office of Emergency Management and Homeland Security (OEMHS), and Lt. Victor “Tony” Galladora from the Montgomery County Police Department (MCPD) led a discussion with symposium attendees on the development of Montgomery County’s small unmanned aerial systems (sUAS) capability over the past four years. The program, which is now fully integrated within MCFRS, OEMHS, and MCPD, supports a variety of emergency response needs, including advanced situational awareness and information sharing. The panel of leaders from Montgomery County provided detailed examples of how the County deploys and benefits from the capability on an ongoing basis as part of its emergency response capabilities.

Finance & Logistics Program Manager Lauren Collins and Emergency Response Planning Specialist Peter McCullough provided attendees with an in-depth look at the origins, implementation, and expansion of the Public Access Trauma Care (PATC) program in Prince George’s and Montgomery Counties. Designed to educate, equip, and empower bystanders to provide life-saving medical aid in the critical minutes before responders arrive on-scene, the PATC program has been deployed across the Prince George’s and Montgomery County public school systems. Participants learned about the step-by-step process through which MDERS developed and deployed the PATC program, as well as how the program has already helped save lives in the Maryland-National Capital Region.

Broadcast from Howard University’s WHUT studios in Washington, D.C., the first day of the symposium analyzed the events leading up to, during, and following the January 6th insurrection at the United States Capitol. Leaders from the District of Columbia Homeland Security and Emergency Management Agency (HSEMA), District of Columbia Fire and Emergency Medical Services (FEMS), and MedStar Washington Hospital Center shared their unique experiences tackling the complex issues faced by their agencies throughout the COVID-19 pandemic, the 2021 Presidential Inauguration, and the January 6th insurrection.

Symposium attendees engaged in real time with the speakers through the Zoom Video Communications platform to ask questions or weigh in on the topics discussed.
